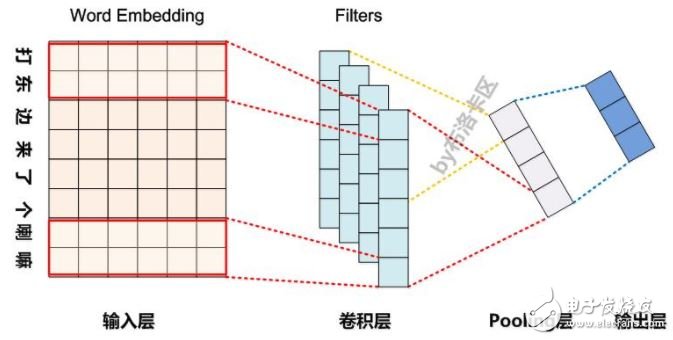

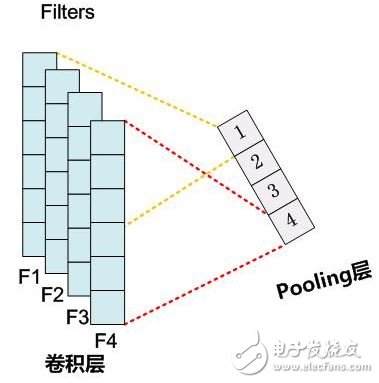

CNN is one of the most widely used deep learning models in natural language processing (NLP), alongside RNN. Figure 1 illustrates a typical CNN architecture applied to NLP tasks. Words are generally represented as word embeddings, which turns one-dimensional text into a two-dimensional input. If the input sequence contains m words and each word embedding has dimension d, the input is an m×d matrix.

Figure 1: Typical network structure of a CNN model in natural language processing

Because sentence lengths vary in NLP, the size of the CNN input is not fixed; it depends on the number of words m. The convolutional layer is the feature extraction layer, and its hyperparameters include the number of filters F. Each filter is a k×d sliding window over the input matrix, where k is the window size (the number of words it covers) and d is the embedding dimension. As the window slides along the sequence, it applies a non-linear transformation at each position, producing a feature map for that filter (of length m - k + 1 with stride 1 and no padding). The filters operate independently, so each one can capture a different pattern in the input text. The pooling layer then reduces the dimensionality of these feature maps, typically by keeping only the maximum value (Max Pooling), which yields a compact representation. Finally, a fully connected layer uses the pooled features to perform classification.

Convolution and pooling are the two core components of a CNN. The following sections focus on pooling operations commonly used in NLP applications.

Max Pooling Over Time in CNN

Max Pooling Over Time is the most commonly used downsampling method in CNNs for NLP tasks. For each filter, it keeps the single highest value in the feature map and discards all the others. This emphasizes the strongest feature but ignores weaker ones, which may still carry useful information.
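To make the pipeline above concrete, here is a minimal sketch of one forward pass written in plain Python, without a deep learning framework. It assumes stride 1, no padding, ReLU as the non-linearity, and a softmax output layer; the function names (`conv_feature_map`, `max_pool_over_time`, `text_cnn_forward`) are hypothetical, and the random weights are stand-ins rather than trained parameters.

```python
import math
import random
from typing import List

# Hypothetical helpers for illustration only.
# Assumptions: stride 1, no padding, ReLU non-linearity, softmax output.
Matrix = List[List[float]]


def conv_feature_map(x: Matrix, filt: Matrix, bias: float) -> List[float]:
    """Slide a k x d filter down an m x d input and return m - k + 1 activations."""
    m, k = len(x), len(filt)
    if m < k:
        raise ValueError("sentence is shorter than the filter window")
    feature_map = []
    for start in range(m - k + 1):
        # Sum of the element-wise product between the k x d window and the filter.
        s = bias
        for i in range(k):
            s += sum(a * b for a, b in zip(x[start + i], filt[i]))
        feature_map.append(max(0.0, s))  # ReLU non-linearity (an assumption)
    return feature_map


def max_pool_over_time(feature_map: List[float]) -> float:
    """Keep only the strongest activation in one filter's feature map."""
    return max(feature_map)


def text_cnn_forward(x: Matrix, filters: List[Matrix], biases: List[float],
                     fc_weights: Matrix, fc_bias: List[float]) -> List[float]:
    """Convolution -> max pooling over time -> fully connected layer -> softmax."""
    # One pooled value per filter, so this vector has length F whatever m is.
    pooled = [max_pool_over_time(conv_feature_map(x, f, b))
              for f, b in zip(filters, biases)]
    logits = [sum(w * p for w, p in zip(row, pooled)) + b
              for row, b in zip(fc_weights, fc_bias)]
    # Numerically stable softmax over the class logits.
    top = max(logits)
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Usage with random weights: m = 7 words, d = 4, window k = 3, F = 5 filters, 2 classes.
random.seed(0)
m, d, k, F, num_classes = 7, 4, 3, 5, 2
x = [[random.uniform(-1, 1) for _ in range(d)] for _ in range(m)]  # stand-in embeddings
filters = [[[random.uniform(-1, 1) for _ in range(d)] for _ in range(k)]
           for _ in range(F)]
biases = [0.0] * F
fc_weights = [[random.uniform(-1, 1) for _ in range(F)] for _ in range(num_classes)]
fc_bias = [0.0] * num_classes
probs = text_cnn_forward(x, filters, biases, fc_weights, fc_bias)
print(probs)  # two class probabilities that sum to 1
```

Because each filter contributes exactly one pooled value, changing the sentence length m leaves the input size of the fully connected layer fixed at F.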
One key advantage of Max Pooling is translation invariance: because only the maximum value is kept, a feature produces the same pooled output wherever it appears. This is particularly beneficial in image processing, where the exact position of a feature often matters less. In NLP, however, position often does matter: for example, the subject typically appears near the beginning of a sentence and the object near the end. Max Pooling discards such positional cues, which can be a drawback for certain tasks.

Another benefit is that Max Pooling reduces the number of model parameters. By compressing each high-dimensional feature map to a single value, it shrinks the input to the fully connected layer, so that layer needs fewer weights. This simplifies the subsequent layers, makes the model more efficient, and helps reduce overfitting.

Max Pooling also helps handle variable-length inputs. The final fully connected layer requires a fixed number of input neurons, and because Max Pooling reduces each filter's output to a single value, the pooling layer always has exactly one neuron per filter. The network therefore keeps a consistent structure regardless of input length.

Figure 2: The number of neurons in the pooling layer is equal to the number of filters

Despite these advantages, Max Pooling has notable drawbacks. First, it completely discards positional information, such as where in the sequence a feature occurs. Second, if a feature appears multiple times, Max Pooling retains only the maximum value and loses how often the feature occurred. This is a problem when frequency matters: in TF-IDF, for example, the term frequency (TF) counts how many times a term occurs, and a higher count signals a stronger feature.

In many ways, identifying the weaknesses of a model is the first step toward improving it. Just as “crisis” can mean both danger and opportunity, recognizing a model's flaws opens the door to innovation. To address the limitations of Max Pooling, researchers have proposed several enhanced pooling methods. One of the most common is K-Max Pooling, which we will explore next.
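The trade-offs of Max Pooling discussed above can be checked on toy numbers. The sketch below uses hand-written, hypothetical feature maps (not outputs of a trained model) and a hypothetical `pool_layer` helper. It shows that sentences of different lengths pool to vectors of the same size F, while both the position and the repetition count of a strong feature vanish after pooling.

```python
from typing import List

# Hypothetical helpers and toy feature maps, for illustration only.


def max_pool_over_time(feature_map: List[float]) -> float:
    """Return the single strongest activation of one filter's feature map."""
    if not feature_map:
        raise ValueError("feature map is empty (sentence shorter than the filter window?)")
    return max(feature_map)


def pool_layer(feature_maps: List[List[float]]) -> List[float]:
    """Apply max pooling over time to every filter: one output value per filter."""
    return [max_pool_over_time(fm) for fm in feature_maps]


# Feature maps from F = 3 filters; their lengths differ because m differs.
short_sentence = [[0.2, 0.7, 0.1], [0.0, 0.4, 0.3], [0.9, 0.2, 0.5]]
long_sentence = [[0.2, 0.7, 0.1, 0.3, 0.6, 0.0, 0.4],
                 [0.0, 0.4, 0.3, 0.1, 0.2, 0.3, 0.1],
                 [0.9, 0.2, 0.5, 0.2, 0.1, 0.3, 0.8]]
print(pool_layer(short_sentence))  # [0.7, 0.4, 0.9]
print(pool_layer(long_sentence))   # [0.7, 0.4, 0.9]

# Drawback 1: position is lost. A strong feature at the start or at the end pools the same.
feature_at_start = [0.9, 0.1, 0.1, 0.1]
feature_at_end = [0.1, 0.1, 0.1, 0.9]
print(max_pool_over_time(feature_at_start) == max_pool_over_time(feature_at_end))  # True

# Drawback 2: frequency is lost. One occurrence or three occurrences pool the same.
once = [0.9, 0.1, 0.1, 0.1]
three_times = [0.9, 0.9, 0.1, 0.9]
print(max_pool_over_time(once) == max_pool_over_time(three_times))  # True
```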